Figure 1: Stepwise behavior in self-supervised learning. When training common SSL algorithms, we find that the loss descends in a stepwise fashion (top left) and the learned embeddings iteratively increase in dimensionality (bottom left). Direct visualization of the embeddings (right; top three PCA directions shown) confirms that the embeddings are initially collapsed to a point, which then expands to a 1D manifold, a 2D manifold, and beyond, concurrently with the steps in the loss.

It is widely believed that deep learning's stunning success is due in part to its ability to discover and extract useful representations of complex data. Self-supervised learning (SSL) has emerged as a leading framework for learning these representations for images directly from unlabeled data, similar to how LLMs learn representations for language directly from web-scraped text. Yet despite SSL's key role in state-of-the-art models such as CLIP and MidJourney, fundamental questions like "what are self-supervised image systems really learning?" and "how does that learning actually occur?" still lack basic answers.

Our recent paper (to appear at ICML 2023) presents what we suggest is the first compelling mathematical picture of the training process of large-scale SSL methods. Our simplified theoretical model, which we solve exactly, learns aspects of the data in a series of discrete, well-separated steps. We then demonstrate that this behavior can be observed in many current state-of-the-art systems. This discovery opens new avenues for improving SSL methods and raises a whole range of new scientific questions that, when answered, will provide a powerful lens for understanding some of today's most important deep learning systems.

Background

We focus here on joint-embedding SSL methods, a superset of contrastive methods, which learn representations that obey view-invariance criteria. The loss function of these models includes a term enforcing matching embeddings for semantically equivalent "views" of an image. Remarkably, this simple approach yields powerful representations on image tasks even when the views are as simple as random crops and color perturbations.
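To make the view-invariance idea concrete, here is a minimal NumPy sketch. It assumes grayscale images stored as 2D arrays and uses a random linear encoder. The helpers `random_crop`, `color_jitter`, and `invariance_term` are hypothetical names, and random brightness scaling stands in for real color perturbation. Note that the invariance term on its own is minimized by a collapsed (constant) embedding. This is why joint-embedding losses pair it with additional terms.

```python
import numpy as np

rng = np.random.default_rng(0)

def random_crop(img, size):
    """Return a random size x size crop of a 2D image array."""
    h, w = img.shape
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    return img[top:top + size, left:left + size]

def color_jitter(img, strength=0.2):
    """Hypothetical stand-in for color perturbation: random brightness scaling."""
    return img * rng.uniform(1 - strength, 1 + strength)

def invariance_term(z1, z2):
    """Mean squared distance between embeddings of two views (rows = samples)."""
    return float(np.mean(np.sum((z1 - z2) ** 2, axis=1)))

# Toy setup: 8 random 32x32 "images" and a random linear encoder on 24x24 crops.
images = rng.random((8, 32, 32))
W = rng.standard_normal((16, 24 * 24)) / 24.0

def view(img):
    """Produce one augmented view, flattened for the linear encoder."""
    return color_jitter(random_crop(img, 24)).ravel()

z1 = np.stack([W @ view(img) for img in images])  # embeddings of the first views
z2 = np.stack([W @ view(img) for img in images])  # embeddings of the second views
print(invariance_term(z1, z2))

# A collapsed encoder (all embeddings equal) drives this term to exactly zero.
print(invariance_term(np.zeros_like(z1), np.zeros_like(z2)))
```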

Theory: Stepwise learning in SSL with linear models

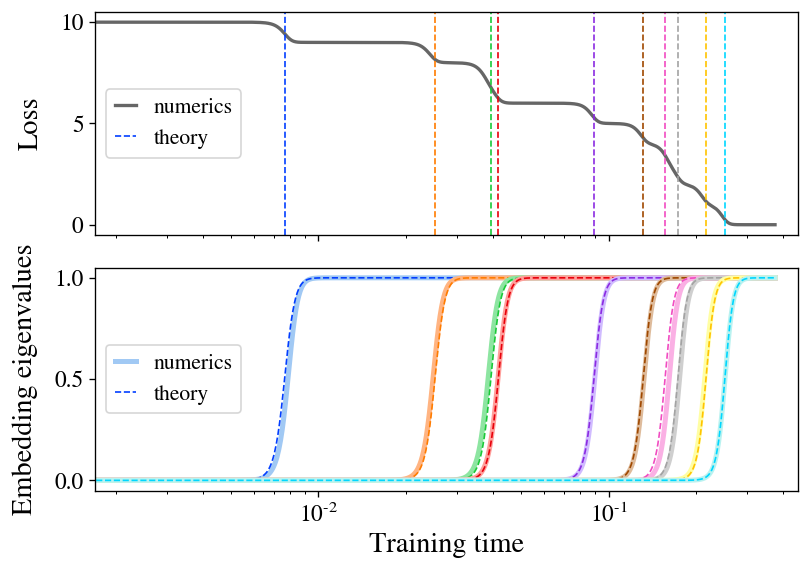

We first describe an exactly solvable linear model of SSL in which both the training trajectories and the final embeddings can be written in closed form. Notably, we find that representation learning separates into a series of discrete steps: the rank of the embeddings starts small and iteratively increases in a stepwise learning process.

The main theoretical contribution of our paper is to exactly solve the training dynamics of the Barlow Twins loss function under gradient flow, for the special case of linear models \(\mathbf{f}(\mathbf{x}) = \mathbf{W}\mathbf{x}\). To summarize our findings: when initialization is small, the model learns a representation composed precisely of the top-\(d\) eigendirections of the featurewise cross-correlation matrix \(\boldsymbol{\Gamma} \equiv \mathbb{E}_{\mathbf{x},\mathbf{x}'}\left[\mathbf{x}\mathbf{x}'^T\right]\). What's more, these eigendirections are learned one at a time, in a sequence of discrete learning steps at times determined by their corresponding eigenvalues. Figure 2 illustrates this learning process. At each learning step, it shows both the growth of a new direction in the represented function and the resulting drop in the loss. As an extra bonus, we find a closed-form equation for the final embeddings learned by the model at convergence.

Figure 2: Stepwise learning appears in a linear model of SSL. We train a linear model with the Barlow Twins loss on a small sample of CIFAR-10. The loss (top) descends in a staircase fashion, with step times well predicted by our theory (dashed lines). The embedding eigenvalues (bottom) spring up one at a time, closely matching theory (dashed curves).
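The stepwise behavior in Figure 2 can be reproduced qualitatively in a toy setting. Below is a minimal NumPy sketch that makes several assumptions:

- It uses the simplified Barlow Twins objective \(\mathcal{L}(\mathbf{W}) = \lVert \mathbf{W}\boldsymbol{\Gamma}\mathbf{W}^T - \mathbf{I} \rVert_F^2\), which pushes the embedding cross-correlation toward the identity.
- Plain gradient descent with a small step size stands in for gradient flow.
- The diagonal \(\boldsymbol{\Gamma}\), its eigenvalues, the initialization scale, and the learning rate are illustrative choices, not the paper's settings.
- `train_linear_barlow_twins` is a hypothetical helper name.

```python
import numpy as np

def train_linear_barlow_twins(gamma, d, init_scale=1e-6, lr=0.01, steps=3000, seed=0):
    """Gradient descent on L(W) = ||W Gamma W^T - I||_F^2 for f(x) = W x.

    Assumes a symmetric cross-correlation matrix gamma. Returns the final W and
    the embedding eigenvalues (eigenvalues of W Gamma W^T, largest first) per step.
    """
    rng = np.random.default_rng(seed)
    W = init_scale * rng.standard_normal((d, gamma.shape[0]))  # small initialization
    eye = np.eye(d)
    history = []
    for _ in range(steps):
        C = W @ gamma @ W.T                  # embedding cross-correlation
        grad = 4.0 * (C - eye) @ W @ gamma   # gradient of ||C - I||_F^2 for symmetric gamma
        W = W - lr * grad
        history.append(np.linalg.eigvalsh(W @ gamma @ W.T)[::-1])  # descending order
    return W, np.array(history)

# Hypothetical input statistics: 4 input features, 3 embedding dimensions.
gamma = np.diag([1.0, 0.5, 0.25, 0.1])
W, eigs = train_linear_barlow_twins(gamma, d=3)

# Step index at which each embedding eigenvalue first exceeds 0.5.
step_times = [int(np.argmax(eigs[:, k] > 0.5)) for k in range(3)]
print(step_times)
print(np.round(eigs[-1], 4))
```

With these settings, the three embedding eigenvalues should rise from near zero to 1 one after another, in order of the corresponding eigenvalues of \(\boldsymbol{\Gamma}\). Meanwhile, the column of \(\mathbf{W}\) for the smallest eigendirection stays near zero, so the learned representation spans the top-3 eigendirections.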

Our finding of stepwise learning is a manifestation of the broader concept of spectral bias. Spectral bias is the observation that many learning systems with approximately linear dynamics preferentially learn eigendirections with higher eigenvalues. This has recently been well studied in standard supervised learning, where higher-eigenvalue eigenmodes have been found to be learned faster during training. Our work finds analogous results for SSL.
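The connection to spectral bias can be seen from a short leading-order calculation. This is an illustrative linearization, not the paper's full solution, and it makes three assumptions:

- It uses the simplified Barlow Twins objective below.
- \(\boldsymbol{\Gamma}\) is symmetric, with eigenpairs \((\gamma_j, \mathbf{v}_j)\).
- The initialization has scale \(s \ll 1\).

While the embedding is still small, each eigendirection grows exponentially at a rate set by its own eigenvalue. As a result, the step time for direction \(j\) scales as \(1/\gamma_j\).

$$
\begin{aligned}
\mathcal{L}(\mathbf{W}) &= \big\lVert \mathbf{W}\boldsymbol{\Gamma}\mathbf{W}^T - \mathbf{I} \big\rVert_F^2,
\qquad
\frac{d\mathbf{W}}{dt} = -\nabla_{\mathbf{W}}\mathcal{L} = -4\,\big(\mathbf{W}\boldsymbol{\Gamma}\mathbf{W}^T - \mathbf{I}\big)\,\mathbf{W}\boldsymbol{\Gamma}, \\
\text{while } \mathbf{W}\boldsymbol{\Gamma}\mathbf{W}^T \approx \mathbf{0}: \quad
\frac{d\mathbf{W}}{dt} &\approx 4\,\mathbf{W}\boldsymbol{\Gamma}
\;\;\Longrightarrow\;\;
\mathbf{W}(t)\,\mathbf{v}_j \approx e^{4\gamma_j t}\,\mathbf{W}(0)\,\mathbf{v}_j, \\
\mathbf{W}\boldsymbol{\Gamma}\mathbf{W}^T = \sum_j \gamma_j\,(\mathbf{W}\mathbf{v}_j)(\mathbf{W}\mathbf{v}_j)^T,
\quad
\gamma_j \lVert \mathbf{W}(t)\,\mathbf{v}_j \rVert^2 &\sim \gamma_j\, s^2 e^{8\gamma_j t}
\;\;\Longrightarrow\;\;
t_j \approx \frac{\ln(1/s^2)}{8\,\gamma_j}.
\end{aligned}
$$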

A linear model merits careful study because sufficiently wide neural networks also have linear parameter dynamics, as shown by the neural tangent kernel (NTK) line of work. This fact lets us extend our solution for a linear model to wide neural networks (or in fact to arbitrary kernel machines). In that case, the model preferentially learns the top-\(d\) eigendirections of a particular operator related to the NTK. Studies of the NTK have yielded many insights into the training and generalization of even nonlinear neural networks. This is a clue that some of our insights may transfer to realistic cases.

Experiment: Stepwise learning in SSL with ResNets

For our main experiments, we train several leading SSL methods with full-scale ResNet-50 encoders. Remarkably, this stepwise learning pattern is clearly visible even in these realistic settings, suggesting that the behavior is central to how SSL learns.

To see stepwise learning with ResNets in realistic setups, all we have to do is run the algorithm and track the eigenvalues of the embedding covariance matrix over time. In practice, the stepwise behavior is easier to see when training from a smaller-than-normal parameter initialization and with a small learning rate. We use these modifications in the experiments discussed here and treat the standard case in our paper.
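The tracking step can be sketched in a few lines of NumPy. It assumes you can collect a batch of embeddings \(Z\) (rows are samples) at each checkpoint. `embedding_spectrum` and `effective_rank` are hypothetical helper names. The relative threshold used to count "active" eigenvalues is an arbitrary choice, not one taken from the paper.

```python
import numpy as np

def embedding_spectrum(Z):
    """Eigenvalues of the embedding covariance matrix, largest first.

    Z: array of shape (num_samples, embedding_dim).
    """
    Zc = Z - Z.mean(axis=0, keepdims=True)  # center each embedding dimension
    cov = Zc.T @ Zc / (Z.shape[0] - 1)      # (embedding_dim, embedding_dim)
    return np.linalg.eigvalsh(cov)[::-1]    # eigvalsh returns ascending order

def effective_rank(eigvals, rel_threshold=1e-3):
    """Count eigenvalues above rel_threshold times the largest one."""
    eigvals = np.clip(eigvals, 0.0, None)   # drop tiny negative round-off
    if eigvals[0] <= 0:
        return 0
    return int(np.sum(eigvals > rel_threshold * eigvals[0]))

# Example: embeddings that vary along only 2 latent directions in an 8-dim space.
rng = np.random.default_rng(0)
Z = rng.standard_normal((500, 2)) @ rng.standard_normal((2, 8))
spectrum = embedding_spectrum(Z)
print(effective_rank(spectrum))
```

In the stepwise regime described above, logging `effective_rank(embedding_spectrum(Z))` at each checkpoint should show the count staying flat between steps and increasing by one as each new eigenvalue rises from near zero. With real, noisy embeddings, the threshold will likely need tuning.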

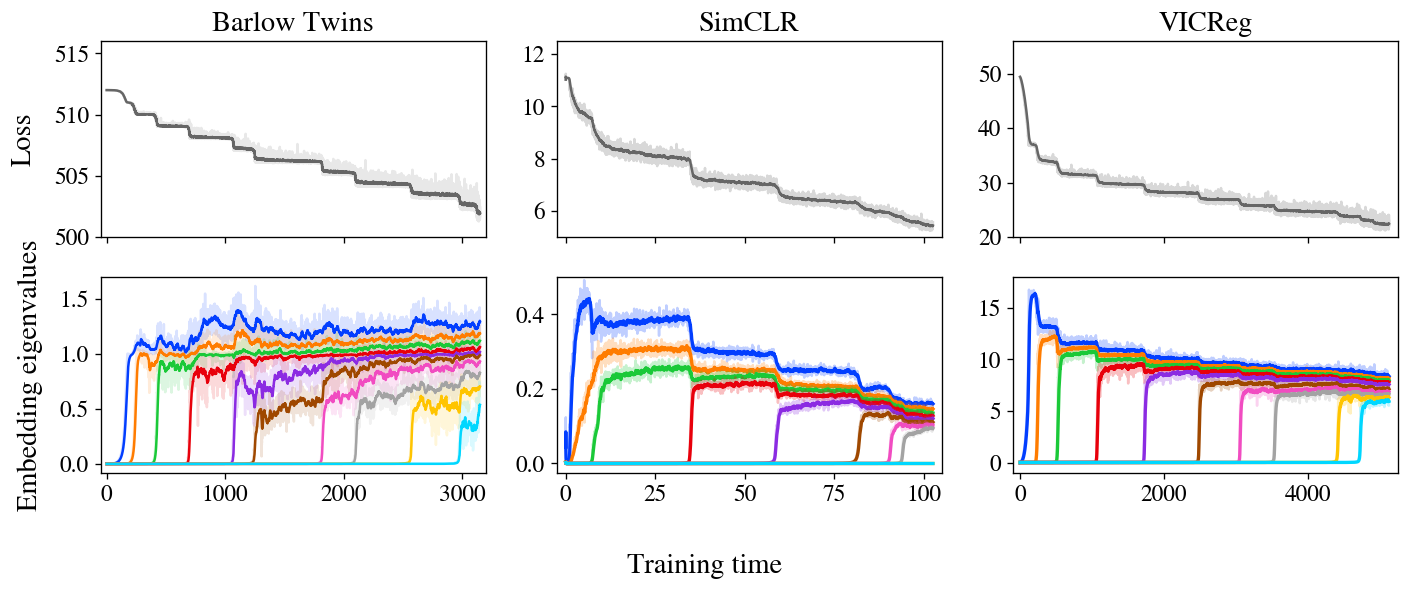

Figure 3: Stepwise learning is apparent in Barlow Twins, SimCLR, and VICReg. The losses and embeddings of all three methods display stepwise learning, with the embeddings iteratively increasing in rank as predicted by our model.

Figure 3 shows the losses and embedding covariance eigenvalues of three SSL methods (Barlow Twins, SimCLR, and VICReg) trained on the STL-10 dataset with standard augmentations. Remarkably, all three show very clear stepwise learning. The loss decreases in a staircase curve, and one new eigenvalue springs up from zero at each subsequent step. Figure 1 shows an animated zoom-in on the early steps of Barlow Twins.

While these three methods look rather different at first glance, folklore has long suspected that they do something similar under the hood. In particular, these and other joint-embedding SSL methods all achieve similar performance on benchmark tasks. The challenge, then, is to identify the shared behavior underlying these varied approaches. Much prior theoretical work has focused on analytical similarities between their loss functions. Our experiments suggest a different unifying principle: SSL methods all learn embeddings one dimension at a time, iteratively adding new dimensions in order of salience.

In a final, preliminary but promising experiment, we compare the embeddings actually learned by these methods with theoretical predictions computed from the NTK after training. We find good agreement between theory and experiment within each method. Comparing across methods, we also find that different methods learn similar embeddings. This adds further support to the idea that these methods are ultimately doing similar things and can be unified.
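One way to compare embeddings from different methods (or from theory) is to compare the subspaces they span. Embeddings that differ only by an invertible linear map are equivalent for linear probing. The sketch below scores two embeddings of the same inputs by the mean squared cosine of the principal angles between their column spaces. This standard measure is chosen here for illustration and is not necessarily the metric used in the paper. `subspace_similarity` is a hypothetical helper name.

```python
import numpy as np

def subspace_similarity(Z1, Z2):
    """Mean squared cosine of the principal angles between the spans of Z1 and Z2.

    Z1, Z2: embeddings of the same inputs, shape (num_samples, dim). Returns a value
    in [0, 1]: 1 means the spans coincide, 0 means they are orthogonal.
    """
    Q1, _ = np.linalg.qr(Z1 - Z1.mean(axis=0))  # orthonormal basis of the centered span
    Q2, _ = np.linalg.qr(Z2 - Z2.mean(axis=0))
    cosines = np.linalg.svd(Q1.T @ Q2, compute_uv=False)  # cosines of principal angles
    k = min(Q1.shape[1], Q2.shape[1])
    return float(np.sum(cosines[:k] ** 2) / k)

rng = np.random.default_rng(0)
Z_a = rng.standard_normal((1000, 4))
Z_b = Z_a @ rng.standard_normal((4, 4))  # same span, different linear map
Z_c = rng.standard_normal((1000, 4))     # unrelated embedding
print(subspace_similarity(Z_a, Z_b), subspace_similarity(Z_a, Z_c))
```

Because the score ignores any invertible linear reparameterization, it focuses on which directions in input space each method has learned, rather than how they are coordinatized.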

Why this matters

Our work paints a basic theoretical picture of the process by which SSL methods assemble learned representations over the course of training. Now that we have a theory, what can we do with it? We believe this picture can aid the practice of SSL from an engineering standpoint. It can also enable a better understanding of SSL, and potentially of representation learning more broadly.

On the practical side, SSL models are known to be slow to train compared with supervised models, and the reason for this difference is unclear. Our picture of training suggests that SSL takes a long time to converge because the later eigenmodes have long time constants and take a long time to grow significantly. If that picture is right, speeding up training could be as simple as selectively focusing the gradient on small embedding eigendirections, pulling them up to the level of the others. In principle, this requires only a simple modification to the loss function or the optimizer. We discuss these possibilities in more detail in our paper.
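To see why the later eigenmodes dominate training time, here is a back-of-the-envelope sketch. It makes three assumptions:

- A linear model is trained by gradient flow.
- The objective is the simplified Barlow Twins loss \(\lVert \mathbf{W}\boldsymbol{\Gamma}\mathbf{W}^T - \mathbf{I}\rVert_F^2\).
- The initialization has scale \(s\).

Linearizing around the origin then gives a leading-order step time \(t_j \approx \ln(1/s^2)/(8\gamma_j)\) for the eigendirection with eigenvalue \(\gamma_j\). The spectrum below is hypothetical, and `predicted_step_times` is a hypothetical helper name.

```python
import math

def predicted_step_times(eigenvalues, init_scale):
    """Leading-order step times t_j ~ ln(1/s^2) / (8 * gamma_j).

    Assumes the simplified loss ||W Gamma W^T - I||_F^2 under gradient flow from a
    small initialization scale s; only a rough estimate, valid for s << 1.
    """
    log_term = math.log(1.0 / init_scale ** 2)
    return [log_term / (8.0 * g) for g in eigenvalues]

# Hypothetical spectrum spanning two orders of magnitude.
gammas = [1.0, 0.3, 0.1, 0.03, 0.01]
times = predicted_step_times(gammas, init_scale=1e-3)
for g, t in zip(gammas, times):
    print(f"gamma = {g:<5} -> step time ~ {t:.1f}")
print(f"last / first step time: {times[-1] / times[0]:.0f}x")
```

Under these assumptions, the last mode takes \(\gamma_1/\gamma_d\) times longer than the first, which is 100 times longer here. Any change that raises a slow mode's effective growth rate would shorten its step time proportionally.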

On the scientific side, framing SSL as an iterative process lets us ask many questions about the individual eigenmodes. Are the modes learned first more useful than those learned later? How do different augmentations change the learned modes, and does this depend on the specific SSL method used? Can we assign semantic content to any eigenmode, or subset of eigenmodes? (For example, we have noticed that the first few modes learned sometimes represent highly interpretable functions, such as an image's average hue and saturation.) If other forms of representation learning converge to similar representations (a claim that is easily tested), then the answers to these questions may have implications for deep learning more broadly.
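A simple first probe of whether an eigenmode carries semantic content, in the spirit of the hue and saturation observation, is to correlate each image's projection onto a top embedding eigendirection with a candidate image statistic. The sketch below assumes RGB images stored as float arrays with values in [0, 1]. It uses Python's `colorsys.rgb_to_hsv` to compute mean saturation. The helper names and the synthetic data are hypothetical.

```python
import colorsys
import numpy as np

def mean_saturation(img):
    """Average HSV saturation of an RGB image with values in [0, 1], shape (H, W, 3)."""
    pixels = img.reshape(-1, 3)
    return float(np.mean([colorsys.rgb_to_hsv(*p)[1] for p in pixels]))

def eigenmode_correlation(Z, feature, mode=0):
    """Pearson correlation between projections onto an embedding eigendirection and a feature."""
    Zc = Z - Z.mean(axis=0)
    _, _, Vt = np.linalg.svd(Zc, full_matrices=False)  # rows of Vt: covariance eigendirections
    proj = Zc @ Vt[mode]
    return float(np.corrcoef(proj, feature)[0, 1])

# Synthetic example: images with varied saturation, and embeddings whose
# dominant direction is (by construction) driven by mean saturation.
rng = np.random.default_rng(0)
images = rng.random((200, 8, 8, 3)) ** rng.uniform(0.2, 3.0, size=(200, 1, 1, 1))
sat = np.array([mean_saturation(img) for img in images])
Z = 10 * np.outer(sat - sat.mean(), rng.standard_normal(16)) + 0.01 * rng.standard_normal((200, 16))
print(abs(eigenmode_correlation(Z, sat)))
```

The sign of an eigendirection is arbitrary, so the absolute correlation is what matters. With real embeddings, a high value would suggest, but not prove, that the mode encodes the statistic.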

All things considered, we are optimistic about the prospects for future work in this area. Deep learning remains a grand theoretical mystery, but we believe our findings provide a useful foothold for future studies of the learning behavior of deep networks.

This post is based on the paper "On the Stepwise Nature of Self-Supervised Learning", which is joint work with Maksis Knutins, Liu Ziyin, Daniel Geisz, and Joshua Albrecht. The work was conducted at Generally Intelligent, where Jamie Simon is a researcher. This post is cross-posted here. We'd be delighted to field your questions or comments.