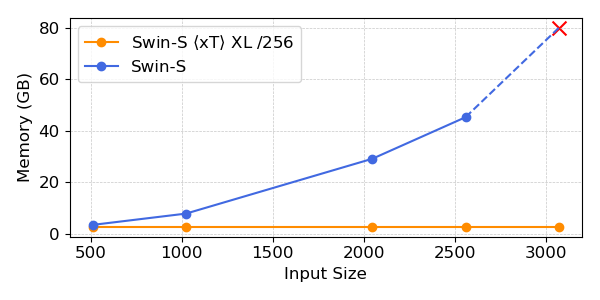

As computer vision researchers, we believe that every pixel can tell a story. However, the field seems to hit writer's block when it comes to working with large images. Large images are no longer rare: the cameras we carry in our pockets and those orbiting the Earth take pictures so big and detailed that they stretch our best current models and hardware to their breaking points. Generally, we face a quadratic increase in memory usage as a function of image size.

Today, we make one of two suboptimal choices when handling large images: downsampling or cropping. Both methods incur a significant loss of the information and context present in the image. We take another look at these approaches and introduce $x$T, a new framework for modeling large images end-to-end on contemporary GPUs while effectively aggregating global context with local details.

Architecture of the $x$T framework.

Why bother with large images anyway?

Why bother with large images? Picture yourself in front of the TV, watching your favorite football team. The field is dotted with players, with the action taking place on only a small portion of the screen at a time. But would you be satisfied if you could only see a small region around where the ball currently is? Or would you be content watching the game in low resolution? Every pixel tells a story, no matter how far apart they are. This is true in every domain, from TV screens to pathologists viewing gigapixel slides to diagnose tiny patches of cancer. These images are treasure troves of information. What is the point if we can't fully explore the riches because our tools can't handle the map?

Sports are fun when you know what's going on.

That is precisely where the frustration lies today. The bigger the image, the more we need to simultaneously zoom out to see the whole picture and zoom in to see the fine details, which makes it a challenge to grasp both the forest and the trees. Most current methods force a choice between losing sight of the forest and missing the trees, and neither option is a good one.

How $x$T tries to solve this problem

Imagine trying to solve a massive jigsaw puzzle. Rather than tackling the whole thing at once, which would be overwhelming, you start with smaller sections, take a good look at each piece, and figure out how they fit into the bigger picture. That is basically how we process large images with $x$T.

$x$T takes these gigantic images and hierarchically chops them into smaller, more digestible pieces. But it's not just about making things smaller. It's about understanding each piece in its own right and then, using some clever techniques, figuring out how these pieces connect on a larger scale. It's like having a conversation with each part of the image, learning its story, and then sharing those stories with the other parts to get the full narrative.

Nested tokenization

At the heart of $x$T is the concept of nested tokenization. Simply put, tokenization in computer vision is akin to chopping an image into pieces (tokens) that a model can digest and analyze. However, $x$T goes a step further and introduces a hierarchy into the process; hence, nested.

Imagine you're tasked with analyzing a detailed map of a city. Instead of trying to take in the entire map at once, you break it down into districts, then neighborhoods within those districts, and finally streets within those neighborhoods. This hierarchical breakdown makes it easier to manage and understand the details of the map while keeping track of where everything fits within the larger picture. That is the essence of nested tokenization: we split the image into regions, and each region can be further split into sub-regions depending on the input size expected by the vision backbone (which we call a region encoder), before being patchified and processed by that region encoder. This nested approach allows us to extract features at different scales at the local level.
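As a rough illustration of the splitting described above, the following NumPy sketch cuts an image into regions and then cuts each region into flattened patches. The function names, the single level of regions (no further sub-regions), and the requirement that sizes divide evenly are assumptions made for this example; they are not taken from the released $x$T code.

```python
import numpy as np


def split_into_tiles(x, tile):
    """Split an (H, W, C) array into non-overlapping (tile, tile, C) tiles in row-major order."""
    h, w, c = x.shape
    if h % tile or w % tile:
        raise ValueError("height and width must be divisible by the tile size")
    # Axes after reshape: (tile row, y within tile, tile column, x within tile, channel).
    x = x.reshape(h // tile, tile, w // tile, tile, c)
    # Bring the two tile indices to the front, then merge them into one axis.
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, tile, tile, c)


def nested_tokenize(image, region_size, patch_size):
    """Hypothetical two-level tokenizer: image -> regions -> flattened patches per region.

    Returns an array of shape (num_regions, patches_per_region, patch_size * patch_size * C).
    """
    regions = split_into_tiles(image, region_size)
    tokens = []
    for region in regions:
        patches = split_into_tiles(region, patch_size)
        tokens.append(patches.reshape(len(patches), -1))
    return np.stack(tokens)
```

For an 8 x 8 single-channel image with `region_size=4` and `patch_size=2`, this yields 4 regions of 4 patches each, and every token keeps track of which region it came from through its position on the first axis.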

Coordinating region and context encoders

Once an image is neatly divided into tokens, $x$T uses two types of encoders to make sense of the pieces: region encoders and context encoders. Each plays a distinct role in piecing together the full story of the image.

The region encoder is a standalone "local expert" that converts independent regions into detailed representations. However, since each region is processed in isolation, no information is shared across the image as a whole. The region encoder can be any state-of-the-art vision backbone. In our experiments we used hierarchical vision transformers (such as Swin and Hiera) and CNNs (such as ConvNeXt).

Enter the context encoder, the big-picture guru. Its job is to take the detailed representations from the region encoders and stitch them together, ensuring that insights from one token are considered in the context of the others. The context encoder is generally a long-sequence model. We experiment with Transformer-XL (and our variant of it, called Hyper) and Mamba, though you could use Longformer and other new advances in this area. Although these long-sequence models are generally designed for language, we show that they can be used effectively for vision tasks.

The magic of $x$T lies in how these components (nested tokenization, region encoders, and context encoders) fit together. By first breaking the image down into manageable pieces, and then systematically analyzing these pieces both in isolation and in conjunction, $x$T manages to maintain the fidelity of the original image's details while also integrating long-distance context, all while fitting massive images end-to-end on contemporary GPUs.
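To make the division of labor concrete, here is a toy NumPy sketch of the two stages. Everything in it is a stand-in chosen for brevity: the "region encoder" is a mean-pool followed by a random projection rather than Swin, Hiera, or ConvNeXt, and the "context encoder" is a single self-attention layer rather than Transformer-XL or Mamba. The names `region_encoder`, `context_encoder`, and `encode_image` are hypothetical. The point is only to show that a change in one region stays local after the first stage and reaches every region after the second.

```python
import numpy as np

rng = np.random.default_rng(0)


def init(rows, cols):
    """Random weights scaled so activations stay at a moderate magnitude."""
    return rng.normal(size=(rows, cols)) / np.sqrt(rows)


def region_encoder(region_tokens, w_proj):
    """Stand-in for a vision backbone: pool one region's patch tokens, then project."""
    return np.tanh(region_tokens.mean(axis=0) @ w_proj)


def context_encoder(region_feats, w_q, w_k, w_v):
    """Stand-in for a long-sequence model: one self-attention layer over region features."""
    q, k, v = region_feats @ w_q, region_feats @ w_k, region_feats @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return region_feats + weights @ v  # residual keeps each region's local detail


def encode_image(tokens, params):
    """Encode every region independently, then mix information across regions."""
    local = np.stack([region_encoder(r, params["proj"]) for r in tokens])
    return local, context_encoder(local, params["q"], params["k"], params["v"])


num_regions, patches_per_region, token_dim, feat_dim = 4, 16, 12, 8
params = {
    "proj": init(token_dim, feat_dim),
    "q": init(feat_dim, feat_dim),
    "k": init(feat_dim, feat_dim),
    "v": init(feat_dim, feat_dim),
}
tokens = rng.normal(size=(num_regions, patches_per_region, token_dim))
local, contextual = encode_image(tokens, params)

# Change only region 0 and re-encode.
perturbed = tokens.copy()
perturbed[0] += 1.0
local_p, contextual_p = encode_image(perturbed, params)

print("regions 1-3 unchanged after region encoder:", np.allclose(local[1:], local_p[1:]))
print("max change in regions 1-3 after context encoder:",
      np.abs(contextual[1:] - contextual_p[1:]).max())
```

In this sketch the region features for regions 1 to 3 are identical before and after the perturbation, while their context-encoded features shift, which is the behavior the two-encoder design is meant to provide.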

Results

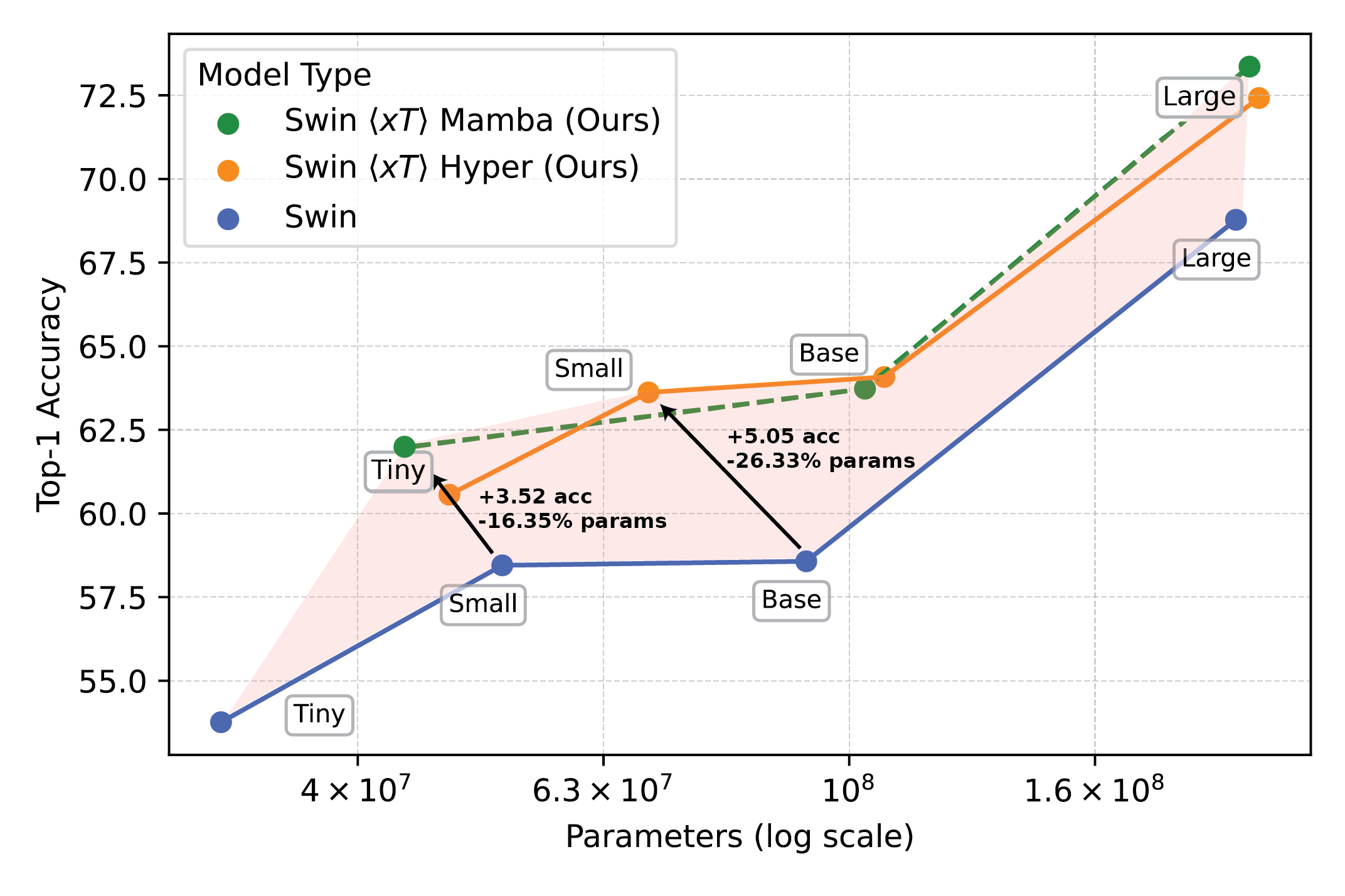

We evaluate $x$T on challenging benchmark tasks ranging from well-established computer vision baselines to rigorous large-image tasks. In particular, we experiment with iNaturalist 2018 for fine-grained species classification, xView3-SAR for context-dependent segmentation, and MS-COCO for detection.

Powerful vision models used with $x$T set a new frontier on downstream tasks such as fine-grained species classification.

Our experiments show that $x$T can achieve higher accuracy on all downstream tasks with fewer parameters, while using significantly less memory per region than state-of-the-art baselines*. We were able to model images as large as 29,000 x 25,000 pixels on 40GB A100s, whereas comparable baselines run out of memory at only 2,800 x 2,800 pixels.

*Depending on your choice of context model, such as Transformer-XL.
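To put the two image sizes above in perspective, the short calculation below compares their pixel counts and, as a purely hypothetical illustration of quadratic growth, estimates the size of a single dense attention matrix over all patch tokens. The 16-pixel patch size, the 2-byte values, and the use of dense global attention are assumptions made for this example; the baselines in our experiments do not necessarily work this way.

```python
import math


def pixel_count(height, width):
    """Total number of pixels in an image."""
    return height * width


def token_count(height, width, patch=16):
    """Number of patch tokens, padding each side up to a multiple of `patch` (assumed size)."""
    return math.ceil(height / patch) * math.ceil(width / patch)


def attention_matrix_bytes(num_tokens, bytes_per_value=2):
    """Size of one dense num_tokens x num_tokens attention matrix (assumed 2-byte values)."""
    return num_tokens ** 2 * bytes_per_value


small = (2_800, 2_800)
large = (29_000, 25_000)

for name, (h, w) in {"2,800 x 2,800": small, "29,000 x 25,000": large}.items():
    n = token_count(h, w)
    gib = attention_matrix_bytes(n) / 2**30
    print(f"{name}: {pixel_count(h, w):,} pixels, {n:,} tokens, "
          f"{gib:,.1f} GiB per dense attention matrix")

pixel_ratio = pixel_count(*large) / pixel_count(*small)
print(f"pixel ratio: {pixel_ratio:.1f}x")
```

The larger image has roughly 92 times as many pixels, but under these assumptions a dense attention matrix grows with the square of the token count, which is why processing regions independently and mixing them with a long-sequence model is attractive.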

Why this matters more than you think

This approach isn't just cool; it's necessary. For scientists tracking climate change or doctors diagnosing disease, it's a game-changer. It means creating models that understand the full story rather than fragments. For example, in environmental monitoring, being able to see both the broader changes over vast landscapes and the details of specific areas can help in understanding the bigger picture of climate impact. In healthcare, it could mean the difference between catching a disease early or not.

We don't claim to have solved all the world's problems in one fell swoop. We hope that with $x$T we have opened the door to what is possible. We're stepping into a new era where we don't have to compromise on the clarity or breadth of our vision. $x$T is a big step towards models that can handle the intricacies of large-scale images without breaking a sweat.

There's still a lot of ground to cover. Research will continue to evolve, and hopefully so will our ability to process even bigger and more complex images. In fact, we are working on follow-ups to $x$T that will expand this frontier further.

In conclusion

For a complete treatment of this work, check out the paper on arXiv. The project page contains links to our released code and weights. If you find this work useful, please cite it as follows:

@article{xTLargeImageModeling,
  title={xT: Nested Tokenization for Larger Context in Large Images},
  author={Gupta, Ritwik and Li, Shufan and Zhu, Tyler and Malik, Jitendra and Darrell, Trevor and Mangalam, Karttikeya},
  journal={arXiv preprint arXiv:2403.01915},
  year={2024}
}