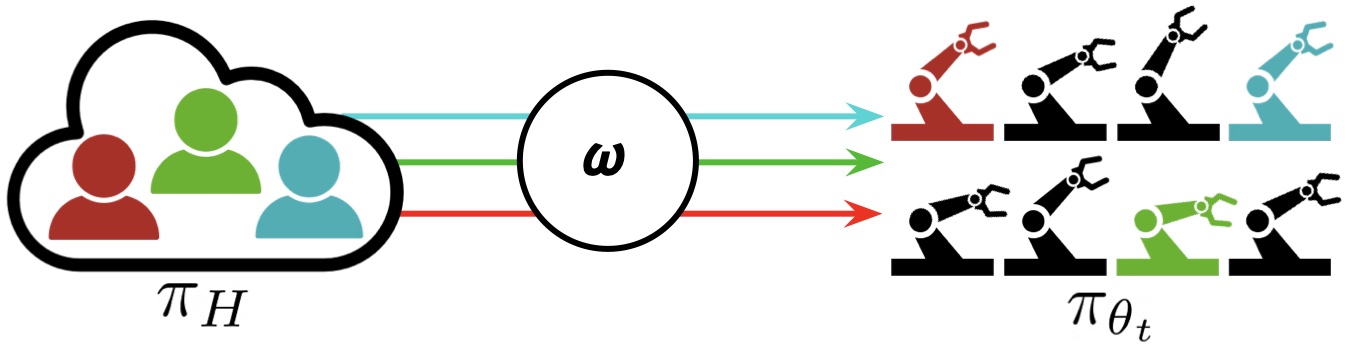

Figure 1: “Interactive Fleet Learning” (IFL) refers to robot fleets in industry and academia that fall back on human teleoperators when necessary and continually learn from them over time.

In the last few years we have seen an exciting development in robotics and artificial intelligence: large fleets of robots have left the lab and entered the real world. Waymo, for example, has over 700 self-driving cars operating in Phoenix and San Francisco and is currently expanding to Los Angeles. Other industrial deployments of robot fleets include applications like e-commerce order fulfillment at Amazon and Ambi Robotics, as well as food delivery at Nuro and Kiwibot.

Commercial and industrial deployments of robot fleets: package delivery (top left), food delivery (bottom left), e-commerce order fulfillment at Ambi Robotics (top right), autonomous taxis at Waymo (bottom right).

These robots use recent advances in deep learning to operate autonomously in unstructured environments. By pooling data from all robots in the fleet, the entire fleet can efficiently learn from the experience of each individual robot. Furthermore, thanks to advances in cloud robotics, the fleet can offload data, memory, and computation (such as the training of large models) to the cloud via the Internet. This approach is known as “fleet learning,” a term popularized by Elon Musk in 2016 press releases about Tesla Autopilot and used in press communications by Toyota Research Institute, Wayve AI, and others. A robot fleet is a modern analogue of a fleet of ships, where the etymology of the word “fleet” traces back to flēot (“ship”) and flēotan (“float”) in Old English.

Data-driven approaches like fleet learning, however, face the problem of the “long tail”: the robots inevitably encounter new scenarios and edge cases that are not represented in the dataset. Naturally, we can’t expect the future to be the same as the past! How, then, can these robotics companies ensure that their services are sufficiently reliable?

One answer is to fall back on remote humans over the Internet, who can interactively take control and “teleoperate” the system when the robot policy is unreliable during task execution. Teleoperation has a rich history in robotics: the world’s first robots were teleoperated during World War II to handle radioactive materials, and the Telegarden pioneered robot control over the Internet in 1994. With continual learning, the human teleoperation data from these interventions can iteratively improve the robot policy and reduce the robots’ reliance on their human supervisors over time. Rather than a discrete jump to full robot autonomy, this strategy offers a continuous alternative that approaches full autonomy over time while enabling reliable robot systems today.

The use of human teleoperation as a fallback mechanism is increasingly popular in modern robotics companies: Waymo calls it “fleet response,” Zoox calls it “TeleGuidance,” and Amazon calls it “continual learning.” Last year, a software platform for remote driving called Phantom Auto was recognized by Time Magazine as one of its Top 10 Inventions of 2022. And just last month, John Deere acquired SparkAI, a startup that develops software for resolving edge cases with humans in the loop.

A remote human teleoperator at Phantom Auto, a software platform for enabling remote driving over the Internet.

Despite this growing trend in industry, however, there has been comparatively little focus on this topic in academia. As a result, robotics companies have had to rely on ad hoc solutions for determining when their robots should cede control. The closest analogue in academia is interactive imitation learning (IIL), a paradigm in which a robot intermittently cedes control to a human supervisor and learns from these interventions over time. A number of IIL algorithms for the single-robot, single-human setting have emerged in recent years, including DAgger and variants such as HG-DAgger, SafeDAgger, EnsembleDAgger, and ThriftyDAgger; nevertheless, when and how to switch between robot and human control remains an open problem. This is even less understood when the notion is generalized to robot fleets, with multiple robots and multiple human supervisors.

IFL Formalism and Algorithms

To this end, in a recent paper at the Conference on Robot Learning we introduced the paradigm of Interactive Fleet Learning (IFL), the first formalism in the literature for interactive learning with multiple robots and multiple humans. Since this phenomenon already occurs in industry, we can now use the phrase “interactive fleet learning” as unified terminology for robot fleet learning that falls back on human control, rather than keeping track of the names of every individual corporate solution (“fleet response,” “TeleGuidance,” etc.). IFL scales up robot learning with four key components:

- On-demand supervision. Since humans cannot effectively monitor the execution of multiple robots at once and are prone to fatigue, the allocation of robots to humans in IFL is automated by some allocation policy $\omega$. Supervision is requested “on demand” by the robots rather than placing the burden of continuous monitoring on the humans.

- Fleet supervision. On-demand supervision enables effective allocation of limited human attention to large robot fleets. IFL allows the number of robots to significantly exceed the number of humans (e.g., by a factor of 10:1 or more).

- Continual learning. Each robot in the fleet can learn from its own mistakes as well as the mistakes of the other robots, allowing the amount of required human supervision to taper off over time.

- The Internet. Thanks to mature and ever-improving Internet technology, the human supervisors do not need to be physically present. Modern computer networks enable real-time remote teleoperation at vast distances.

In the Interactive Fleet Learning (IFL) paradigm, M humans are allocated to the robots that need the most help in a fleet of N robots (where N can be much larger than M). The robots share policy $\pi_{\theta_t}$ and learn from human interventions over time.

We assume that the robots share a common control policy $\pi_{\theta_t}$ and that the humans share a common control policy $\pi_H$. We also assume that the robots operate in independent environments with identical state and action spaces (but not identical states). Unlike a robot swarm of typically low-cost robots that coordinate to achieve a common objective in a shared environment, a robot fleet simultaneously executes a shared policy in distinct parallel environments (e.g., different bins on an assembly line).

The goal in IFL is to find an optimal supervisor allocation policy $\omega$, a mapping from $\mathbf{s}^t$ (the state of all robots at time t) and the shared policy $\pi_{\theta_t}$ to a binary matrix that indicates which human will be assigned to which robot at time t. The IFL objective is a novel metric we call the “return on human effort” (ROHE):

$$\max_{\omega \in \Omega} \mathbb{E}_{\tau \sim p_{\omega, \theta_0}(\tau)} \left[\frac{M}{N} \cdot \frac{\sum_{t=0}^T \bar{r}( \mathbf{s}^t, \mathbf{a}^t)}{1+\sum_{t=0}^T \|\omega(\mathbf{s}^t, \pi_{\theta_t}, \cdot) \|^2 _F} \right]$$

where the numerator is the total reward across robots and timesteps, and the denominator is the total number of human actions across robots and timesteps. Intuitively, the ROHE measures the performance of the fleet normalized by the total human supervision required. See the paper for more of the mathematical details.
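To make the ROHE objective above concrete, here is a minimal Python sketch that evaluates the quantity inside the expectation for a single recorded trajectory. It is an illustration under stated assumptions, not code from the IFL Benchmark: the function names, the N x M orientation of the allocation matrix (rows are robots, columns are humans), and the convention that each per-timestep reward is already summed over robots are all choices made here. For a binary matrix, the squared Frobenius norm is simply the number of robot-human assignments at that timestep.

```python
from typing import Sequence

# Assumed layout: N x M binary matrix, entry [i][j] is 1 if human j supervises robot i.
Matrix = Sequence[Sequence[int]]


def squared_frobenius_norm(allocation: Matrix) -> int:
    """Sum of squared entries; for a binary matrix, the number of assignments."""
    return sum(entry * entry for row in allocation for entry in row)


def rohe(fleet_rewards: Sequence[float], allocations: Sequence[Matrix],
         num_humans: int, num_robots: int) -> float:
    """Return on human effort for one trajectory (no expectation taken).

    fleet_rewards[t] is assumed to be the reward summed over all robots at
    timestep t; allocations[t] is the allocation matrix at timestep t.
    """
    if len(fleet_rewards) != len(allocations):
        raise ValueError("need exactly one allocation matrix per timestep")
    total_reward = sum(fleet_rewards)
    human_effort = sum(squared_frobenius_norm(a) for a in allocations)
    return (num_humans / num_robots) * total_reward / (1 + human_effort)


if __name__ == "__main__":
    # Toy rollout: N = 4 robots, M = 2 humans, 3 timesteps.
    rewards = [2.0, 3.0, 4.0]
    allocs = [
        [[1, 0], [0, 1], [0, 0], [0, 0]],  # two assignments
        [[1, 0], [0, 0], [0, 0], [0, 0]],  # one assignment
        [[0, 0], [0, 0], [0, 0], [0, 0]],  # no supervision requested
    ]
    print(rohe(rewards, allocs, num_humans=2, num_robots=4))  # 1.125
```

In the toy rollout the fleet collects a total reward of 9 using 3 units of human effort, so the result is (2/4) · 9 / (1 + 3) = 1.125. Averaging this value over many rollouts would approximate the expectation in the objective.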

Using this formalism, we can now instantiate and compare IFL algorithms (i.e., allocation policies) in a principled way. We propose a family of IFL algorithms called Fleet-DAgger, where the policy learning algorithm is interactive imitation learning and each Fleet-DAgger algorithm is parameterized by a unique priority function $\hat p: (s, \pi_{\theta_t}) \rightarrow [0, \infty)$ that each robot in the fleet uses to assign itself a priority score. Similar to scheduling theory, higher priority robots are more likely to receive human attention. Fleet-DAgger is general enough to model a wide range of IFL algorithms, including IFL adaptations of existing single-robot, single-human IIL algorithms such as EnsembleDAgger and ThriftyDAgger. Note, however, that the IFL formalism isn’t limited to Fleet-DAgger: policy learning could be performed with a reinforcement learning algorithm like PPO, for instance.
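The idea of priority-based allocation can be sketched in a few lines of Python. This is a simplified illustration rather than the Fleet-DAgger algorithm from the paper: it scores every robot with a priority function, lets the M available humans go to the highest-priority robots, and ignores practical details such as how long a human stays with a robot once assigned. The names `allocate_by_priority` and `uncertainty_priority`, the 0.5 threshold, and the rule that a priority of zero means "no help requested" are assumptions made for this example.

```python
from typing import Callable, List, Sequence, TypeVar

State = TypeVar("State")


def allocate_by_priority(states: Sequence[State],
                         priority_fn: Callable[[State], float],
                         num_humans: int) -> List[List[int]]:
    """Build an N x M binary allocation matrix from per-robot priority scores.

    priority_fn maps one robot's state to a score in [0, inf); the shared
    policy is assumed to be captured inside priority_fn (e.g., via a closure).
    """
    priorities = [priority_fn(s) for s in states]
    # Assumption: a score of zero means the robot does not request help.
    candidates = [i for i, p in enumerate(priorities) if p > 0]
    # Highest priority first; the stable sort breaks ties by robot index.
    candidates.sort(key=lambda i: -priorities[i])
    allocation = [[0] * num_humans for _ in states]
    # Each human gets at most one robot, and each robot at most one human.
    for human, robot in enumerate(candidates[:num_humans]):
        allocation[robot][human] = 1
    return allocation


def uncertainty_priority(uncertainty: float) -> float:
    """Hypothetical priority: how far an uncertainty estimate exceeds 0.5."""
    return max(0.0, uncertainty - 0.5)


if __name__ == "__main__":
    # Each "state" here is just a made-up scalar uncertainty estimate.
    fleet_states = [0.9, 0.2, 0.7, 0.6]
    print(allocate_by_priority(fleet_states, uncertainty_priority, num_humans=2))
    # [[1, 0], [0, 0], [0, 1], [0, 0]]
```

With four robots and two humans, the two most uncertain robots (indices 0 and 2) each receive a human, the third candidate (index 3) has to wait, and robot 1 never asks for help. Swapping in a different `priority_fn` yields a different allocation policy without changing the allocation logic.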

IFL Benchmark and Experiments

To determine how best to allocate limited human attention to large robot fleets, we need to be able to empirically evaluate and compare different IFL algorithms. To this end, we introduce the IFL Benchmark, an open-source Python toolkit available on GitHub to facilitate the development and standardized evaluation of new IFL algorithms. We extend NVIDIA Isaac Gym, a highly optimized software library for end-to-end GPU-accelerated robot learning released in 2021, without which the simulation of hundreds or thousands of learning robots would be computationally intractable. Using the IFL Benchmark, we run large-scale simulation experiments with N = 100 robots, M = 10 algorithmic humans, 5 IFL algorithms, and 3 high-dimensional continuous control environments (Figure 1, left).

We also evaluate IFL algorithms in a real-world image-based block pushing task with N = 4 robot arms and M = 2 remote human teleoperators (Figure 1, right). The 4 arms belong to 2 bimanual ABB YuMi robots operating simultaneously in 2 separate labs about 1 kilometer apart, and remote humans in a third physical location perform teleoperation through a keyboard interface when requested. Each robot pushes a cube toward a unique goal position randomly sampled in the workspace; the goals are programmatically generated in the robots’ overhead image observations and automatically resampled when the previous goals are reached. Physical experiment results suggest trends that are approximately consistent with those observed in the benchmark environments.

Takeaways and Future Directions

To address the gap between the theory and practice of robot fleet learning and to facilitate future research, we introduce new formalisms, algorithms, and benchmarks for Interactive Fleet Learning. Since IFL does not dictate a specific form or architecture for the shared robot control policy, it can be flexibly synthesized with other promising research directions. For instance, diffusion policies, recently demonstrated to gracefully handle multimodal data, can be used in IFL to allow heterogeneous human supervisor policies. Alternatively, multi-task language-conditioned Transformers like RT-1 and PerAct can be effective “data sponges” that enable the robots in the fleet to perform heterogeneous tasks despite sharing a single policy. The systems aspect of IFL is another compelling research direction: recent developments in cloud and fog robotics enable robot fleets to offload all supervisor allocation, model training, and crowdsourced teleoperation to centralized servers in the cloud with minimal network latency.

While Moravec’s Paradox has so far prevented robotics and embodied AI from fully enjoying the recent spectacular success of large language models (LLMs) like GPT-4, the “bitter lesson” of LLMs is that supervised learning at unprecedented scale is what ultimately leads to the emergent properties we observe. Since we don’t yet have a supply of robot control data nearly as plentiful as all the text and image data on the Internet, the IFL paradigm offers one path forward for scaling up supervised robot learning and deploying robot fleets reliably in today’s world.

Acknowledgments

This post is based on the paper “Fleet-DAgger: Interactive Robot Fleet Learning with Scalable Human Supervision,” presented at the 6th Annual Conference on Robot Learning (CoRL) in Auckland, New Zealand, in December 2022. The research was performed at the AUTOLab at UC Berkeley in affiliation with the Berkeley Artificial Intelligence Research (BAIR) Lab and the CITRIS “People and Robots” (CPAR) Initiative. The authors were supported in part by donations from Google, Siemens, Toyota Research Institute, and Autodesk, and by equipment grants from PhotoNeo, NVidia, and Intuitive Surgical. Any opinions, findings, conclusions, or recommendations expressed are those of the authors and do not necessarily reflect the views of the sponsors. Thanks to co-authors Lawrence Chen, Satvik Sharma, Karthik Dharmarajan, Brijen Thananjeyan, Pieter Abbeel, and Ken Goldberg for their contributions to this work and helpful feedback.

For further details on Interactive Fleet Learning, see the paper on arXiv, the CoRL presentation video on YouTube, the open-source codebase on GitHub, the high-level summary on Twitter, and the project website.

If you would like to cite this article, please use the following bibtex:

@article{ifl_blog,

title={Interactive Fleet Learning},

author={Hoque, Ryan},

url={https://bair.berkeley.edu/blog/2023/04/06/ifl/},

journal={Berkeley Artificial Intelligence Research Blog},

year={2023}

}