TL;DR: We propose the asymmetric certified robustness problem, which requires certified robustness for only one class and reflects real-world adversarial scenarios. This focused setting allows us to introduce feature-convex classifiers, which produce closed-form and deterministic certified radii on the order of milliseconds.

Figure 1. Illustration of feature-convex classifiers and their certification for sensitive-class inputs. This architecture composes a Lipschitz-continuous feature map $\varphi$ with a learned convex function $g$. Since $g$ is convex, it is globally underapproximated by its tangent plane at $\varphi(x)$, yielding certified norm balls in the feature space. Lipschitzness of $\varphi$ then yields appropriately scaled certificates in the original input space.

Despite their widespread usage, deep learning classifiers are acutely vulnerable to adversarial examples: small, human-imperceptible image perturbations that fool machine learning models into misclassifying the modified input. This weakness severely undermines the reliability of safety-critical processes that incorporate machine learning. Many empirical defenses against adversarial perturbations have been proposed, often only to be defeated later by stronger attack strategies. We therefore focus on certifiably robust classifiers, which provide a mathematical guarantee that their prediction will remain constant within an $\ell_p$-norm ball around an input.

Conventional certified robustness methods suffer from a range of drawbacks, including nondeterminism, slow execution, poor scaling, and certification against only one attack norm. We argue that these issues can be addressed by refining the certified robustness problem so that it is more closely aligned with practical adversarial settings.

The Asymmetric Certified Robustness Problem

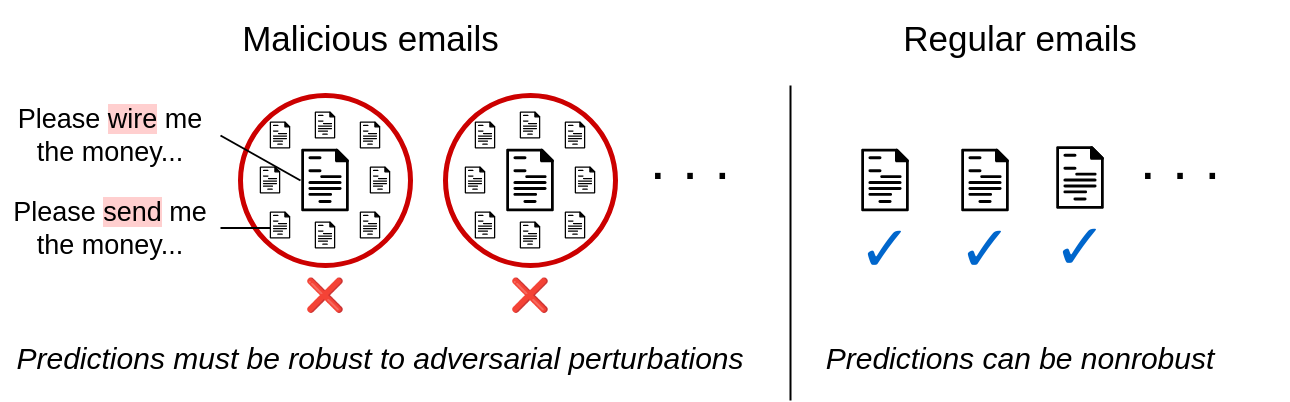

Current certifiably robust classifiers produce certificates for inputs belonging to any class. For many real-world adversarial applications, this scope is unnecessarily broad. Consider the illustrative case of someone composing a phishing scam email while trying to avoid spam filters. This adversary will always attempt to fool the spam filter into thinking that their spam email is benign, never the converse. In other words, the attacker is solely attempting to induce false negatives from the classifier. Similar settings include malware detection, fake news flagging, social media bot detection, medical insurance claims filtering, financial fraud detection, phishing website detection, and many more.

Figure 2. Asymmetric robustness in email filtering. Practical adversarial settings often require certified robustness for only one class.

These applications all involve a binary classification setting with one sensitive class that an adversary is attempting to avoid (for example, the “spam email” class). This motivates the problem of asymmetric certified robustness, which aims to provide certifiably robust predictions for inputs in the sensitive class while maintaining high clean accuracy for all other inputs. We provide a more formal problem statement in the main text.
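To make the goal concrete, here is one informal way to write it in our own notation. This is a sketch only, and the paper's formal statement may differ. Let $f: \mathbb{R}^d \to \{1, 2\}$ be a binary classifier whose class 1 is the sensitive class. Asymmetric certified robustness asks for a radius $r(x) > 0$ at every input predicted as sensitive:

```latex
f(x) = 1 \;\Longrightarrow\; f(x + \delta) = 1 \quad \text{for all } \delta \in \mathbb{R}^d \text{ with } \|\delta\|_p < r(x).
```

Clean accuracy on both classes should also stay high. No certificate is required when $f(x) = 2$, and feature-convex classifiers exploit exactly this freedom.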

Feature-Convex Classifiers

We propose feature-convex neural networks to address the asymmetric robustness problem. This architecture composes a simple Lipschitz-continuous feature map $\varphi: \mathbb{R}^d \to \mathbb{R}^q$ with a learned input-convex neural network (ICNN) $g: \mathbb{R}^q \to \mathbb{R}$ (Figure 1). ICNNs enforce convexity from the input to the output logit by composing ReLU nonlinearities with nonnegative weight matrices. Since the decision region of a binary ICNN consists of a convex set and its complement, we add the precomposed feature map $\varphi$ to permit nonconvex decision regions.
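The NumPy sketch below illustrates this composition. Everything in it is hypothetical:

- the layer sizes and random weights;
- the feature map $\varphi(x) = (x, |x|)$;
- the layer pattern: ReLUs, nonnegative hidden-to-hidden weights, and unconstrained affine skip connections from the input.

The layer pattern follows the common ICNN recipe, not necessarily the paper's exact architecture.

```python
import numpy as np

rng = np.random.default_rng(0)


def relu(t):
    return np.maximum(t, 0.0)


def feature_map(x):
    """Hypothetical feature map phi(x) = (x, |x|), mapping R^d to R^(2d)."""
    return np.concatenate([x, np.abs(x)])


# Lipschitz constants of feature_map w.r.t. the same l_p norm on input and output:
# ||phi(x) - phi(y)||_p <= LIP[p] * ||x - y||_p, since ||x_i| - |y_i|| <= |x_i - y_i|.
LIP = {1: 2.0, 2: np.sqrt(2.0), np.inf: 1.0}


class TinyICNN:
    """Hypothetical two-hidden-layer input-convex network g: R^q -> R."""

    def __init__(self, q, width=16):
        # Layer 0: unconstrained affine map of the input, then ReLU (each unit is convex).
        self.W0 = rng.normal(size=(width, q))
        self.b0 = rng.normal(size=width)
        # Layer 1: hidden-to-hidden weights must be nonnegative to preserve convexity.
        self.Z1 = np.abs(rng.normal(size=(width, width)))
        # Skip connections from the input are affine in u, so they may have any sign.
        self.W1 = rng.normal(size=(width, q))
        self.b1 = rng.normal(size=width)
        # Output logit: nonnegative weights on hidden units plus an affine skip term.
        self.z_out = np.abs(rng.normal(size=width))
        self.w_out = rng.normal(size=q)
        self.b_out = -1.0

    def __call__(self, u):
        h0 = relu(self.W0 @ u + self.b0)
        # A nonnegative sum of convex functions plus an affine term, passed through
        # the convex, nondecreasing ReLU, stays convex in u.
        h1 = relu(self.Z1 @ h0 + self.W1 @ u + self.b1)
        return float(self.z_out @ h1 + self.w_out @ u + self.b_out)


def predict_sensitive(g, x):
    """Feature-convex classifier: sensitive class iff g(phi(x)) > 0."""
    return g(feature_map(x)) > 0
```

The $|x|$ features enter $g$ through weights of either sign, so $g(\varphi(x))$ need not be convex in $x$. This is how the feature map permits nonconvex decision regions while $g$ itself stays convex.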

Feature-convex classifiers enable fast computation of sensitive-class certified radii for all $\ell_p$-norms. Because a convex function is globally underapproximated by any of its tangent planes, we can obtain a certified radius in the intermediate feature space. This radius is then propagated to the input space via the Lipschitzness of $\varphi$. The asymmetric setting is critical here, as this architecture only produces certificates for the positive-logit class $g(\varphi(x)) > 0$.

The resulting $\ell_p$-norm certified radius formula is particularly elegant:

\[
r_p(x) = \frac{\color{blue}{g(\varphi(x))}}{\mathrm{Lip}_p(\varphi)\, \color{red}{\|\nabla g(\varphi(x))\|_{p,*}}}.
\]
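The formula follows in three short steps. We sketch them under three assumptions: $\nabla g$ is a gradient of $g$ at $\varphi(x)$ (or any subgradient where ReLUs make $g$ nondifferentiable), $\|\cdot\|_{p,*}$ is the dual norm of $\|\cdot\|_p$, and $g(\varphi(x)) > 0$.

```latex
\begin{aligned}
&\text{(1) Convexity (tangent plane):} && g(u) \ge g(\varphi(x)) + \nabla g(\varphi(x))^\top (u - \varphi(x)) \quad \forall u \in \mathbb{R}^q, \\
&\text{(2) H\"older's inequality:} && g(u) \ge g(\varphi(x)) - \|\nabla g(\varphi(x))\|_{p,*}\, \|u - \varphi(x)\|_p, \\
&\text{(3) Lipschitzness, } u = \varphi(x + \delta): && g(\varphi(x + \delta)) \ge g(\varphi(x)) - \mathrm{Lip}_p(\varphi)\, \|\nabla g(\varphi(x))\|_{p,*}\, \|\delta\|_p.
\end{aligned}
```

The right-hand side of (3) stays positive whenever $\|\delta\|_p < r_p(x)$, so every such perturbation keeps the prediction in the sensitive class. If $g(\varphi(x)) \le 0$, the bound certifies nothing, which is why certificates are issued only for the positive-logit class.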

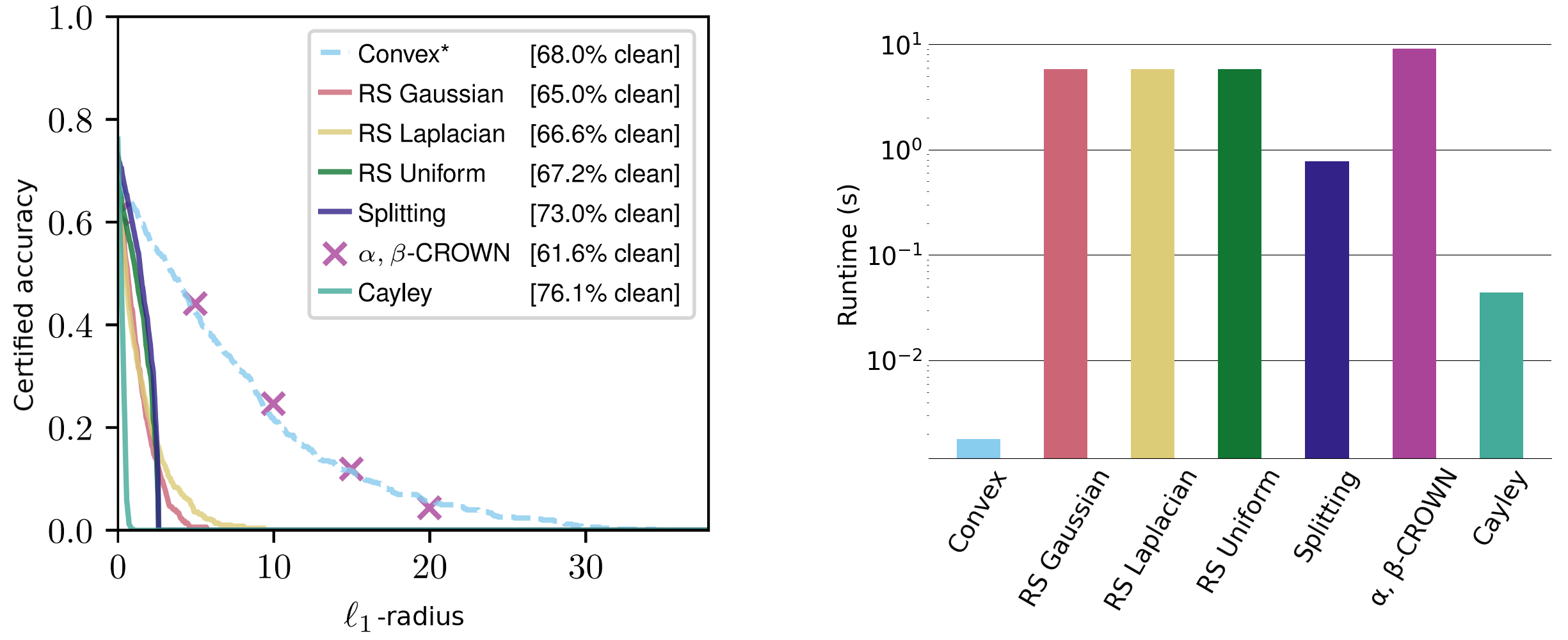

The non-constant terms are easily interpretable. The radius scales proportionally with the classifier confidence (blue) and inversely with the classifier sensitivity (red). We evaluate these certificates across a range of datasets. They are competitive for $\ell_1$ and comparable for $\ell_2$ and $\ell_{\infty}$, even though other methods are generally tailored to a specific norm and require orders of magnitude more runtime.

Figure 3. Sensitive-class certified radii for the $\ell_1$-norm on the CIFAR-10 cats-vs-dogs dataset. Runtimes on the right are averaged over the $\ell_1$-, $\ell_2$-, and $\ell_{\infty}$-radii (note the log scaling).

Our certificates hold for any $\ell_p$-norm and are closed-form and deterministic, requiring just one forward and one backward pass per input. They are computable in milliseconds and scale well with network size. For comparison, current state-of-the-art methods such as randomized smoothing and interval bound propagation typically take several seconds to certify even small networks. Randomized smoothing methods are also inherently nondeterministic, and their certificates hold only with high probability.
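The NumPy sketch below computes the certificate under stated assumptions. It uses a hypothetical one-hidden-layer ICNN $g$ and a hypothetical linear feature map $\varphi(x) = Ax$, whose $\ell_p$ Lipschitz constant is the induced operator norm $\|A\|_{p \to p}$.

- The forward pass evaluates $g(\varphi(x))$.
- A hand-written backward pass returns a (sub)gradient.
- The dual exponents pair $\ell_1$ with $\ell_\infty$ and $\ell_2$ with itself.

Dimensions and weights are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
d, q, m = 8, 6, 12  # input dim, feature dim, hidden width (arbitrary choices)

# Hypothetical linear feature map phi(x) = A x and one-hidden-layer convex g.
A = rng.normal(size=(q, d)) / np.sqrt(d)
W = rng.normal(size=(m, q))      # first-layer weights: any sign is allowed
b = rng.normal(size=m)
z = np.abs(rng.normal(size=m))   # nonnegative output weights keep g convex
a = rng.normal(size=q)           # unconstrained affine skip term
c = 0.5

# Dual exponent p* with 1/p + 1/p* = 1, used for ||grad||_{p,*}.
DUAL = {1: np.inf, 2: 2, np.inf: 1}


def phi(x):
    return A @ x


def g_and_subgrad(u):
    """Forward pass of g(u) = z . ReLU(W u + b) + a . u + c and a manual backward pass."""
    pre = W @ u + b
    value = z @ np.maximum(pre, 0.0) + a @ u + c
    # ReLU'(0) is taken as 0, which is a valid subgradient choice for a convex g.
    grad = W.T @ (z * (pre > 0)) + a
    return float(value), grad


def certified_radii(x, norms=(1, 2, np.inf)):
    """Closed-form radii r_p(x) for several l_p norms from one forward/backward pass.

    Returns None when g(phi(x)) <= 0: the asymmetric setting issues no
    certificate for the non-sensitive class.
    """
    value, grad = g_and_subgrad(phi(x))
    if value <= 0:
        return None
    radii = {}
    for p in norms:
        lip = np.linalg.norm(A, ord=p)              # induced operator norm ||A||_{p->p}
        dual = np.linalg.norm(grad, ord=DUAL[p])    # dual norm ||grad||_{p,*}
        radii[p] = value / (lip * dual)
    return radii
```

For example, `certified_radii(rng.normal(size=d))` returns either `None` or a dictionary keyed by `1`, `2`, and `np.inf`. Scaling `z`, `a`, and `c` by a common positive factor scales $g$ and its gradient together, so every radius stays unchanged.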

Theoretical Promise

While initial results are promising, our theoretical work suggests that ICNNs have significant untapped potential, even without a feature map. Although binary ICNNs are restricted to learning convex decision regions, we prove that there exists an ICNN that achieves perfect training accuracy on the CIFAR-10 cats-vs-dogs dataset.

Fact. There exists an input-convex classifier that achieves perfect training accuracy on the CIFAR-10 cats-vs-dogs dataset.
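A standard convex-separation argument shows exactly when such a classifier exists. This is our own sketch, not necessarily the proof given in the paper. Let $P$ be the training images of the sensitive class and $N$ the remaining training images, and suppose no $x_i \in P$ lies in the convex hull $\mathrm{conv}(N)$. Since $\mathrm{conv}(N)$ is compact and convex, strict separation gives, for each $x_i \in P$, a vector $a_i$ and an offset $b_i$ with

```latex
a_i^\top x_i + b_i > 0, \qquad a_i^\top x + b_i \le 0 \;\; \forall x \in N,
\qquad\Longrightarrow\qquad
g(x) = \max_{x_i \in P} \bigl(a_i^\top x + b_i\bigr)
\;\text{ is convex, } g > 0 \text{ on } P, \; g \le 0 \text{ on } N.
```

A maximum of finitely many affine functions can be built from ReLUs, nonnegative weights, and affine skip connections. For affine $h_1, h_2$, for example, $\max(h_1, h_2) = h_2 + \mathrm{ReLU}(h_1 - h_2)$, so $g$ is an ICNN. Conversely, if some sensitive-class image lies in $\mathrm{conv}(N)$, the convexity of $\{g \le 0\}$ forces that image to be misclassified. The hull condition is therefore also necessary.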

However, our architecture achieves only 73.4% training accuracy without a feature map. Training performance does not imply test-set generalization. Still, this result suggests that ICNNs are at least theoretically capable of attaining the modern machine learning paradigm of overfitting to the training dataset. We therefore pose the following open problem to the field.

Open problem. Learn an input-convex classifier that achieves perfect training accuracy on the CIFAR-10 cats-vs-dogs dataset.

Conclusion

We hope that the asymmetric robustness framework will inspire novel architectures that are certifiable in this more focused setting. Our feature-convex classifier is one such architecture and provides fast, deterministic certified radii for any $\ell_p$-norm. We also pose the open problem of overfitting the CIFAR-10 cats-vs-dogs training dataset with an ICNN, which we show is theoretically possible.

This post is based on the following paper:

Asymmetric Certified Robustness via Feature-Convex Neural Networks

Samuel Pfrommer, Brendon G. Anderson, Julien Piet, Somayeh Sojoudi

37th Conference on Neural Information Processing Systems (NeurIPS 2023).

Further details are available on arXiv and GitHub. If our paper inspires your work, please consider citing it with:

@inproceedings{pfrommer2023asymmetric,
  title={Asymmetric Certified Robustness via Feature-Convex Neural Networks},
  author={Samuel Pfrommer and Brendon G. Anderson and Julien Piet and Somayeh Sojoudi},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
  year={2023}
}